Data analysts wear a lot of hats, and the first ones to join a team may wear some extra ones. In doing so, they’ll have soaked up a remarkable amount of information by the time the team grows.

We’re lucky to have such excellent foundations of knowledge, but at what point does all that siloed knowledge become a liability? Consider a hypothetical: what happens if they win the lottery next week and resign? We may find ourselves scrambling to interpret their work, unprepared for certain situations, or even locked out of accounts.

What is the lottery factor?

Software engineering teams define the lottery factor (aka bus factor) as “the minimum number of team members that have to suddenly disappear from a project before the project stalls due to lack of knowledgeable or competent personnel.”

Similar to engineering teams, data teams run the risk of tech failures. If only one person knows how to maintain ETL pipelines or Airflow jobs, even a single vacation day could leave your team stuck when something breaks. Knowledge of overall system design comes into play, too: if data looks wrong on a dashboard, you need at least one person online who knows how that data point gets from the data source to the dashboard.

However, a lot of the siloed knowledge in data teams concerns not how something in progress is being built, but rather existing systems, historical analyses, decisions, and events. If one person on the data team defined a key metric or designed a dashboard, they accumulated a lot of context in that process. Longer tenure also tends to bring encyclopedic knowledge of specific events, feature releases, or other changes that left lasting effects in the data, as well as of which cross-functional partner is best placed to answer specific questions.

So while it’s great that our first team members know a ton, it’s a risk to our company that this knowledge is siloed. Ideally, we can spread this knowledge across the team and lessen that risk.

The activities we suggest starting with for small teams continue to add value over time, so if your team is bigger and not doing these things yet, it’s not too late to begin.

First data hire — get a head start on documentation

When you’re a team of one, a lottery factor of one is unavoidable. While it is unrealistic to eliminate the lottery factor entirely, there are still a few steps you can take to get started. All of them pay off asynchronously: you shouldn’t expect much return until you actually bring on a second person. Because of that, each of these steps is lightweight, so that you can stay productive in the present.

Transition to more stable credentials

First things first: make sure that your team isn’t using personal credentials for admin accounts to SaaS tools, databases, and so on, since anything tied to one person’s login may break data pipelines or other processes if that person leaves the company. Using service or shared accounts (e.g., analytics@yourcompany.com) whose credentials multiple team members can access makes an interruption or lockout less likely.
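The same idea applies to scripts and scheduled jobs: rather than embedding one person’s login, a pipeline can read a shared service account’s credentials from the environment, populated by whatever secrets manager or shared vault your team uses. The Python sketch below assumes a hypothetical naming convention of `<PREFIX>_USER` and `<PREFIX>_PASSWORD`; the prefix and variable names are illustrative, not taken from any particular tool.

```python
import os


def get_service_credentials(prefix: str = "ANALYTICS_SVC") -> dict:
    """Read shared service-account credentials from environment variables.

    Assumes a hypothetical convention of <PREFIX>_USER and <PREFIX>_PASSWORD,
    set by the team's secrets manager rather than by any one person.
    """
    names = (f"{prefix}_USER", f"{prefix}_PASSWORD")
    values = {name: os.environ.get(name) for name in names}

    # Fail loudly if a shared credential is missing, so the gap is noticed
    # immediately instead of surfacing later as a broken pipeline.
    missing = [name for name, value in values.items() if not value]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")

    return {"user": values[names[0]], "password": values[names[1]]}
```

A job would call `get_service_credentials()` at startup. If the person who set up the account leaves, the team updates one shared secret instead of hunting through code for a personal login.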

Be verbose with comments

If you are at this stage, you have enough on your plate as is. We are not going to lecture you on how important it is to build things elegantly or efficiently, or to have perfect documentation. You want to get things done, quickly. But you can still strike a balance by leaving some code comments along the way.

Feel free to build as you would, with the simplest solution needed, but ask yourself, “If someone were onboarding without me here, what would they need to know to understand this?” That may be as simple as a few words explaining a quick formula, or a few rambling sentences on why you filtered out a sliver of rows.

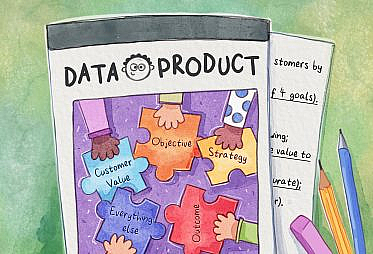
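As a sketch of what that can look like, here is a small Python example where the comment explains why a filter exists, not just what it does. The order data, the `yourcompany.com` domain, and the QA test-order scenario are all hypothetical.

```python
# Hypothetical order records; in practice these would come from the warehouse.
orders = [
    {"order_id": 1, "customer_email": "ana@example.com", "amount": 40.0},
    {"order_id": 2, "customer_email": "qa+test@yourcompany.com", "amount": 999.0},
    {"order_id": 3, "customer_email": "ben@example.com", "amount": 25.5},
]


def revenue_excluding_internal(orders, internal_domain="yourcompany.com"):
    """Sum order amounts, skipping orders placed from internal email addresses."""
    # Why this filter exists: QA places test orders from company email
    # addresses. They aren't real revenue, and one large test order can
    # make a day look like a sales spike. (Hypothetical scenario.)
    real_orders = [
        o for o in orders
        if not o["customer_email"].endswith("@" + internal_domain)
    ]
    return sum(o["amount"] for o in real_orders)


print(revenue_excluding_internal(orders))  # 65.5
```

Without the comment, a new teammate would see only that some rows disappear; with it, they know why, and can tell whether the rule still applies.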

Establish a knowledge base

Whether or not you have consciously thought about it, you probably have a knowledge base. It can live in Google Drive, Confluence, Notion, or many other tools, but whatever the tool, a central location for many different pieces of information is a valuable resource. The knowledge base also becomes more useful as more team members join and more notable, documentable things happen. For example, keeping notes from retros or postmortems in your knowledge base lets less-tenured team members discover context on projects or events that they might not have found otherwise.

The benefit of the knowledge base depends on actually populating it with analyses, style guides, meeting notes, handy queries, and anything else relevant to your team members. Therefore, one thing to prioritize initially is making it easy to add new material. If you don’t have a knowledge base, all you need to do to start is create one shared folder or wiki page.

Create a changelog

Michelle Ballen wrote for Locally Optimistic back in 2018 about keeping a log of notable events that may impact your data. Examples may include product launches, marketing promotions, feature releases, press, bugs, and more.

It doesn’t cost much to maintain. Even just a date and a quick note about what happened is enough to be a big help to anyone wondering, a year from now, why sales spiked on a certain day or why there were no signups for a three-day span. Having explanations ready goes a long way with your stakeholders when they notice these blips themselves.
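A changelog can be as simple as a shared spreadsheet or wiki page. For teams that would rather keep it next to their code, here is a minimal Python sketch under that assumption: each entry is just a date and a note, and a hypothetical `events_near` helper pulls up whatever was logged around a suspicious blip. The entries are made up for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ChangelogEntry:
    day: date
    note: str


# Hypothetical entries: a date and a quick note are all that's required.
CHANGELOG = [
    ChangelogEntry(date(2024, 5, 10), "Spring promo email sent to all users"),
    ChangelogEntry(date(2024, 6, 1), "Signup form bug: submissions failing"),
    ChangelogEntry(date(2024, 6, 4), "Signup form fix deployed"),
]


def events_near(day: date, window_days: int = 2, log=CHANGELOG) -> list:
    """Return notes logged within `window_days` of `day` (inclusive), oldest first."""
    start = day - timedelta(days=window_days)
    end = day + timedelta(days=window_days)
    return [e.note for e in sorted(log, key=lambda e: e.day) if start <= e.day <= end]
```

For example, `events_near(date(2024, 6, 2))` returns both signup-form notes, which is exactly the context someone needs when asking why signups dropped to zero for a few days.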

The changelog is valuable at any team size, so teams of one or more would benefit from starting to capture knowledge today. It has been a huge value-add to our team at Future (a data team of five serving 300 data consumers), where there are frequent feature releases to our app and adjustments to our digital marketing strategy. To keep it manageable, we recommend not stressing about backfilling; just start capturing new events.

Two to five team members — onboarding and pairing to spread knowledge

Your team has just doubled in size from one to two members. Congrats! You are excited to have much more capacity once your new teammate is fully up to speed. However, they do not yet have all that muscle memory you have accumulated in your role.

At this stage of growth, you probably don’t yet have the documentation of your dreams built out. This places the burden of knowledge sharing on both of you — your new teammate needs to be proactive in asking questions and reading through existing codebases and data products, and you will need to spend a lot of face time with them demonstrating best practices and providing feedback on their initial work.

Assign scavenger hunts to new team members

Most data practitioners live out of a few tables in the data warehouse and know them well. However, if there are tables that only one or two people on the team work with, you carry some risk. Having team members write a query against those tables even once helps build familiarity.

At Dutchie, our team assigned scavenger hunts to address this. Writing one took about 20 minutes: 10 questions to answer using the BI tool, along the lines of “using the orders table, calculate last month’s gross merchandise value”, “which customer success manager currently handles the most accounts”, and “which type of support tickets do we see most often”. We gave team members about a week to complete it, then held a screen-share where they presented their results and could ask clarifying questions.

Jim Franken, a Senior Data Analyst at Dutchie, found the exercise worthwhile. “The scavenger hunt pushed me to read Looker documentation and familiarize myself with its foundational features,” he said. “It especially helped me learn about dashboards, explores, and how to find specific data to answer business questions.” I have heard of other companies adopting this practice with great success, some extending it with sections for finding specific tables in the database and exploring the team’s data documentation.

Conduct pair programming

Pair programming is when two team members share a screen or sit at the same computer and work through a coding exercise together. This is especially helpful for ramping up new team members on early-stage teams where you may not have fully developed style guides or workflows.

Even if new team members have adequate technical skills, it may take some time for them to adapt to your team’s specific workflows and ways of doing things. Giving them a venue to observe and ask impromptu questions (especially ones they think could be “dumb” and might not ask over message) is a great way to speed up knowledge sharing.

Participate in peer reviews

Whether it’s a pull request on a version-controlled codebase, a draft of a dashboard, or an analysis about to go out, having a peer review process is likely to improve many different types of deliverables. Similar to pair programming, reviewing each other’s work promotes two-way knowledge sharing. It also sets aside an opportunity to catch bugs, ask clarifying questions, and request additional commenting or documentation.

The data team at Future has at least one member of the team review each pull request to our codebases, and hosts a team demo (think “show-and-tell”) where team members walk through their approach to dashboards, analyses, and other deliverables. These sessions exhibit some of the clever implementations used to solve problems, and have sparked several productive discussions about last-mile edits.

Five to 10 team members — cross-train

Your team has now progressed to five members. With a few more onboardings under your belt, you have locked down the recurring questions and the common pain points. Now it’s time to remove yourself as the bottleneck to onboarding as well as normalize everyone sharing their work.

This may be the stage where you consider structurally building knowledge redundancy via cross-training. If only one person works on a specific part of the codebase, others on the team should know the basics, whether the area in question is marketing analytics or data engineering. Any team member should feel like they can go on vacation without being interrupted when a small bug arises!

To be clear, we are not suggesting that you have data engineers build dashboards, or data analysts write data pipelines — nor are we suggesting that analysts rotate the business function they work with every few months to prevent lock-in.

If you’ve created your knowledge base and conducted pair programming as suggested above, you have a great start. To some extent, your team members will have a broad idea of what their peers are working on and how they approach their work.

Conduct basic cross-training with pre-recorded videos

One practice that’s great for both onboarding and cross-training is recording videos for your knowledge base. By this point, you understand the frequently asked questions from new hires as well as the most important aspects of your work. If you are doing a training session over Zoom, you can record it so others can watch it in the future.

Additionally, you can sit down in front of a key dashboard or piece of code, press record on Loom, and casually explain some of the design choices you made and anything else that seems notable. It doesn’t need to be scripted or perfect, and there’s no need to do multiple takes. But a 10-minute investment on one person’s part may save hours down the line if someone else ends up working on that same item.

Something for every team

Whether the headcount of your team is one, 10, or larger, there’s always going to be room to improve your team’s lottery factor. While you may never get 100% of knowledge distributed across your team, there are tactics you can employ at any stage.

How has your team addressed the lottery factor? We’d love to hear your ideas and experiences in Slack.