Or: How perspective changes when you’re the one asking the questions

Four years ago, I would have rolled my eyes at myself. Hard.

Back then, on the data team, I had strong opinions about the right way to access data. Product managers who wanted to query the production database replica instead of using our carefully crafted data warehouse? They were wrong. They were bypassing all the work we’d done to clean, validate, and structure the data. They were missing the forest for the trees, asking for quick answers instead of building sustainable insights.

Now, I realize… I was wrong. Well, sort of.

The Data Perspective

Let me paint the picture of my former self. Every day, my team and I spent hours building pipelines, designing star schemas, and ensuring data quality. We created dimension tables, fact tables, and carefully orchestrated transformations that turned messy operational data into something beautiful and queryable. The data warehouse was our masterpiece—a single source of truth where everything was clean, consistent, and ready for analysis. We designed it for a wider range of stakeholders and for longevity, thinking beyond the immediate needs of any single team.

When a PM would Slack me asking for a quick review of a query they wrote against the replica, I’d internally groan. Why are they ignoring all this infrastructure we’ve built? Don’t they understand that the data in the warehouse is better? More reliable? More complete?

I’d dutifully validate the query they wanted, but I’d also lecture them about why they should be using the warehouse instead. I’d explain the data quality issues in the raw tables, the potential for inconsistencies, the missing context that our transformations provided. I was convinced they just didn’t understand the technical complexities.

The Plot Twist

Fast-forward to today, and I’m sitting on the other side of that conversation. I’m the PM now, and last week I found myself doing exactly what I used to criticize: asking our data team to validate a quick query I wrote against the production replica instead of using the warehouse.

The irony wasn’t lost on me. But here’s the thing—I finally understand why PMs do this, and it’s not because we’re lazy or technically ignorant.

Why I Query the Replica Now

When I query the production replica, I’m working with tables I helped design, fields I named, and relationships I understand intimately. I know that `user_events.created_at` is the timestamp we care about, not `user_events.updated_at`. I know that `deleted_at` doesn’t actually mean deleted, and that `status = 'active'` is how we filter out test accounts. I know exactly which join conditions to use because I’ve seen the application code that creates these records.
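To make that kind of tribal knowledge concrete, here is a small sketch that uses Python's built-in `sqlite3` as a stand-in for the replica. Only `user_events.created_at`, `updated_at`, `deleted_at`, and the `status = 'active'` filter come from the paragraph above; the `users` table, the assumption that `status` and `deleted_at` live on it, and the sample rows are all hypothetical. The query buckets events by `created_at`, filters on `status`, and deliberately does not filter on `deleted_at`.

```python
import sqlite3

# Hypothetical replica schema: only the column names mentioned in the post are "real".
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (
    id INTEGER PRIMARY KEY,
    status TEXT NOT NULL,        -- 'active' is the filter that excludes test accounts
    deleted_at TEXT              -- set on some users who are NOT actually deleted
);
CREATE TABLE user_events (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id),
    created_at TEXT NOT NULL,    -- when the event happened: the timestamp we care about
    updated_at TEXT NOT NULL     -- bumped by later writes, so not the event time
);
""")
conn.executemany("INSERT INTO users VALUES (?, ?, ?)", [
    (1, "active", None),
    (2, "active", "2024-01-03"),  # deleted_at is set, but this is still a real user
    (3, "test", None),            # test account
])
conn.executemany("INSERT INTO user_events VALUES (?, ?, ?, ?)", [
    (1, 1, "2024-01-05", "2024-02-01"),  # updated later; created_at still says Jan 5
    (2, 2, "2024-01-05", "2024-01-05"),
    (3, 3, "2024-01-05", "2024-01-05"),
    (4, 1, "2024-01-06", "2024-01-06"),
])

# Daily event counts: bucket by created_at, keep only 'active' users, ignore deleted_at.
rows = conn.execute("""
    SELECT date(e.created_at) AS day, COUNT(*) AS events
    FROM user_events AS e
    JOIN users AS u ON u.id = e.user_id
    WHERE u.status = 'active'
    GROUP BY day
    ORDER BY day
""").fetchall()
print(rows)  # [('2024-01-05', 2), ('2024-01-06', 1)]
```

Someone without that context might reasonably add `AND u.deleted_at IS NULL` or group by `updated_at`, and each of those would quietly change the answer.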

But when I turn to the data warehouse, I’m suddenly a tourist in my own data. You go from a simple schema in Postgres to some column in a table 15 levels removed from the raw data, transformed through a lineage DAG that would make a family tree look simple. What used to be `user_events` is now `dim_user_activity` or `fact_engagement_events` or something else entirely. The field I know as `user_id` might be `user_key` or `customer_identifier`. The simple boolean I understood as `is_paid_user` has been transformed into some complex calculated field with business logic I didn’t write and don’t immediately understand.

Maybe in the original product spec, the feature was called X, but by launch it was called Y, and then 6 months later was rebranded Z. A new hire on the marketing team wants to know about usage of the feature—they want to call it Z, and it’s probably called that in the data warehouse. But I know that for reasons lost to time, the column they actually want in production is X_enabled, even though no one remembers that name anymore.

There’s also the practical reality that new features often aren’t modeled in the core data warehouse tables yet. Sometimes it’s a backlog issue: it should be there but isn’t. But sometimes a brand-new feature just isn’t important enough to get that level of support from the data team right away. When I need to understand how users are interacting with something we shipped last week, the replica might be my only option.

The Documentation Dance

I’ve written plenty of queries against our data warehouse, but the process almost always goes like this:

- Start writing what I think is the right query

- Realize I don’t know what the table is actually called in the warehouse

- Hunt down the documentation (is it in Confluence? Notion? That GitHub repo?)

- Find the table, discover it’s been renamed to something “more descriptive”

- Start mapping warehouse fields back to the application fields I understand

- Realize there’s some business logic baked into the transformation that I need to account for

- Finally write the query, but now I’m not 100% sure I got the nuances right

Compare that to querying the replica:

- Write the query using table and field names I already know

- Run it

- Get my answer

The cognitive overhead is completely different. When I’m in PM mode, trying to quickly validate a hypothesis or debug an issue, that friction matters enormously.

Sure, AI tools can help with some of this—they’re getting better at finding tables, explaining transformations, and mapping relationships. But they can’t give you the institutional context about why deleted_at doesn’t actually mean deleted, or the confidence that comes from having written the original application code yourself. The fundamental context problem remains.

The Familiarity Factor

There’s also something to be said for working with data structures you intimately understand. When I query the production replica, I’m not just accessing data—I’m accessing data with full context about how it got there. I know what edge cases exist because I’ve seen the application handle them. I know what the nulls mean because I understand the user flow that creates them. I know which timestamps to trust because I remember the conversations we had when we added that tracking.

The data warehouse abstracts away this complexity, which is usually a good thing. But sometimes, that abstraction removes exactly the context I need to interpret the results correctly.

The Numbers Problem

When PMs query the replica and data professionals query the warehouse, we often get different numbers. And both of us are “right.”

The data team has carefully filtered out test accounts, removed outliers, and applied business logic transformations that make sense for strategic analysis. When they report that we had 50,000 new signups this month, that number excludes the 500 test accounts created by the engineering team, the 200 spam signups that were later deleted, and the handful of users who signed up and immediately churned because they were clearly in the wrong place.

When I query the replica and see roughly 50,700 new signups, I’m seeing the raw operational reality: everything that hit our database, warts and all. For my immediate question of “did our new signup flow break after yesterday’s deploy?”, that raw number might actually be more useful, because I want to see everything that’s happening, including potential new sources of junk data.

The result? We end up in meetings where the PM says one number and the data team says another, and everyone gets confused about whose number is “right.” Neither of us is wrong—we’re just answering slightly different questions with data that’s been processed differently.

This isn’t a bug; it’s a feature. But it requires us to be much more explicit about what we’re measuring and why. The warehouse gives us clean numbers for clean questions. The replica gives us messy numbers for messy questions. Both have their place, but we need to understand which we’re using and communicate that context clearly.
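One way to make that context explicit is to report both numbers along with the exclusions that separate them. The sketch below is hypothetical: the `Signup` flags and the `reconcile` helper are illustrative names, not a real schema, and the synthetic data is shaped like the 50,700 vs. 50,000 example (it leaves out the handful of wrong-place signups so the totals line up exactly).

```python
from dataclasses import dataclass


@dataclass
class Signup:
    """A raw signup row as the replica sees it (hypothetical flags for illustration)."""
    user_id: int
    is_test_account: bool = False      # e.g. created by the engineering team
    is_spam: bool = False              # e.g. later deleted as spam
    churned_immediately: bool = False  # signed up in the wrong place and left


def reconcile(signups: list[Signup]) -> dict[str, int]:
    """Split the raw replica count into the exclusions a warehouse model might apply."""
    counts = {"raw": len(signups), "test_accounts": 0, "spam": 0, "wrong_place": 0, "warehouse": 0}
    for s in signups:
        # Each row lands in exactly one bucket, so raw == sum of exclusions + warehouse.
        if s.is_test_account:
            counts["test_accounts"] += 1
        elif s.is_spam:
            counts["spam"] += 1
        elif s.churned_immediately:
            counts["wrong_place"] += 1
        else:
            counts["warehouse"] += 1
    return counts


# Synthetic data: 50,000 "real" signups plus 500 test accounts and 200 spam signups.
signups = (
    [Signup(user_id=i) for i in range(50_000)]
    + [Signup(user_id=100_000 + i, is_test_account=True) for i in range(500)]
    + [Signup(user_id=200_000 + i, is_spam=True) for i in range(200)]
)
print(reconcile(signups))
# {'raw': 50700, 'test_accounts': 500, 'spam': 200, 'wrong_place': 0, 'warehouse': 50000}
```

Putting the breakdown next to the headline number turns "whose number is right?" into "which exclusions does this question need?"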

Different Questions, Different Tools

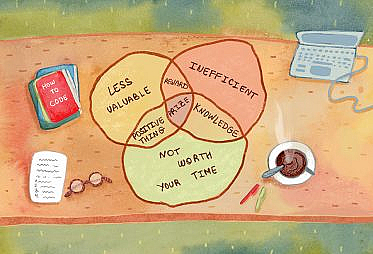

Business stakeholders and data professionals often ask fundamentally different types of questions, and these questions have different context requirements.

Data professionals typically ask questions like:

- “What’s the monthly trend in user engagement across all cohorts?”

- “How do retention rates vary by acquisition channel over time?”

- “What’s the lifetime value distribution for users who signed up in Q3?”

- “Can we build a reliable model to predict churn using this data?”

- “What’s our data quality score for this critical business metric?”

These are great warehouse questions. They benefit from clean data, proper joins, and historical consistency. More importantly, they’re analytical questions where the additional cognitive load of learning the warehouse schema pays off because you’re doing deep, sustained analysis that will inform multiple decisions over time.

PMs (and other business stakeholders) ask questions like:

- “Is the new checkout flow causing more payment failures?”

- “Are users actually clicking the button we just moved?”

- “Did fixing that bug yesterday actually fix the problem?”

- “How many support tickets did we get about feature X this week?”

These are operational questions where speed matters more than perfection, and where the overhead of context-switching to warehouse schemas often isn’t worth it for a quick validation that informs an immediate decision.

Of course, it’s not always so black and white. Take a question like “Are we hitting our weekly active user target?” This could go either way depending on context. If it’s a well-established metric that’s part of regular reporting, the warehouse makes sense. But if you’re asking it mid-week because something feels off, or if there’s some quirk in how your organization defines “active” that makes the warehouse calculation unreliable for real-time monitoring, the replica might be the better choice.

The gray area questions are often the most interesting ones—and the ones where understanding both perspectives becomes most valuable.

The Handoff Problem

But here’s where things get complicated: what happens when my quick replica query becomes “the number”?

Last month, I ran a quick query against the replica to check how our new feature was performing. The number looked good, so I mentioned it in our weekly team meeting. That number made it into our team’s slide deck for the monthly business review. Suddenly, my hastily written replica query, complete with my quick-and-dirty filters and best-guess business logic, became the official metric we’re using to judge the success of a major product initiative.

The data team probably has a different number in the warehouse. Theirs is almost certainly more accurate, more thoughtfully calculated, and better documented. But mine got there first, and now it’s “the number” everyone expects to see.

I’m not sure what the right answer is here. Should I have waited for the data team to build the proper analysis? Should organizations have clearer handoff protocols for when ad-hoc queries become official metrics? Should there be some kind of “certification” process before numbers make it into leadership decks?

When I was drafting this post, I didn’t have a solution to this problem, but Caitlin Moorman suggested a rule of thumb: if multiple teams are making decisions based on the metric, or if it’s consistently used in executive-level reporting (“consistently” is a vague guideline, but a good signal is that it’s still being used multiple quarters after launch), it probably needs data eyes on it.
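That rule of thumb is simple enough to write down as a checklist. Here is one hypothetical encoding: the `MetricUsage` fields, the metric and team names, and the two-quarter threshold standing in for "multiple quarters" are all assumptions for illustration, not a standard.

```python
from dataclasses import dataclass, field


@dataclass
class MetricUsage:
    """How an ad-hoc metric is being used (hypothetical fields for illustration)."""
    name: str
    deciding_teams: set[str] = field(default_factory=set)  # teams making decisions on it
    in_exec_reporting: bool = False   # appears in executive-level reporting
    quarters_since_launch: int = 0    # how long after launch it is still being reported


def needs_data_review(metric: MetricUsage, min_quarters: int = 2) -> bool:
    """Apply the rule of thumb: multiple deciding teams, or sustained exec-level use."""
    multiple_teams = len(metric.deciding_teams) > 1
    # "Consistently" is vague; this sketch assumes it means the metric is still in
    # exec reporting at least `min_quarters` quarters after launch.
    sustained_exec_use = metric.in_exec_reporting and metric.quarters_since_launch >= min_quarters
    return multiple_teams or sustained_exec_use


launch_month = MetricUsage("new_feature_adoption", {"growth"}, in_exec_reporting=True, quarters_since_launch=0)
two_quarters_on = MetricUsage("new_feature_adoption", {"growth"}, in_exec_reporting=True, quarters_since_launch=2)
shared = MetricUsage("new_feature_adoption", {"growth", "marketing"})

print(needs_data_review(launch_month))     # False
print(needs_data_review(two_quarters_on))  # True
print(needs_data_review(shared))           # True
```

Under this reading, a number that lands in one deck during launch month doesn't trip the rule yet; it's the metric that keeps showing up quarter after quarter, or that a second team starts steering by, that should get data eyes on it.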

There’s also the reality that data can become a bottleneck. You can’t demand that teams use only blessed metrics if you can’t keep up with the speed of the business, and hardly any data team can (or should be expected to). The trick is finding the right balance between speed and rigor.

But Taylor Murphy raised an interesting question: whose job is it to actually worry about this transition? Most PMs probably don’t give a rip about it unless it causes pain in their day-to-day work. I happen to care more about it because I’ve been on the other side, but that’s not typical. Should the data team be proactively monitoring for ad-hoc queries that are gaining traction? Should there be some kind of escalation process when PMs realize their quick analysis is becoming important? I don’t think there are clear answers yet, but these are the conversations data organizations need to be having.

What I do know is that this happens constantly, and I don’t think most organizations have good processes for managing this transition from operational query to strategic metric. We need to figure out how to preserve the speed and agility that replica querying provides while maintaining the rigor and accuracy that warehouse-based analysis offers.

A New Appreciation

Being on both sides of this divide has given me a new appreciation for the challenges each role faces. Data professionals aren’t being pedantic when they push for warehouse usage—they’re trying to ensure data quality and prevent the technical debt that comes from ad-hoc querying. They’ve seen firsthand how inconsistent definitions and poor data practices can undermine trust in analytics across the organization.

Business stakeholders aren’t being reckless when they want replica access—they’re trying to make informed decisions quickly in a fast-moving environment. They need answers that are “good enough” to move forward, not perfect answers that come too late to matter.

The best data organizations I’ve seen don’t force everyone into a single pattern. They recognize that different roles have different needs and different levels of context. They provide both options and help people understand when to use each. They build real-time dashboards for operational questions and comprehensive reporting for strategic ones. They create self-service tools that let business users get quick answers without overwhelming the data team, while still maintaining the infrastructure for deep analysis.

The Confession

So here’s my confession: I’ve become the PM I used to hate, and I’m not sorry about it. But I’m also a better PM because I understand the data perspective, and I hope I’m helping my data team understand the business stakeholder perspective too.

The next time you find yourself frustrated with someone asking for “the wrong kind” of data access, take a step back and ask what they’re really trying to accomplish. You might find that there’s method to their madness—and wisdom in their seemingly foolish requests.

After all, the best data insights come not from perfect data, but from asking the right questions at the right time with the right tools. Sometimes that means the warehouse. Sometimes that means the replica. And sometimes, it means having the humility to admit that the approach you used to criticize might actually make perfect sense. And maybe, just maybe, it means data teams need to start thinking more like product teams—understanding their users’ real needs instead of assuming they know what’s best for them.

Special thanks to Nicklas Ankarstad for putting up with my shenanigans, to Taylor Murphy for regular thought partnership, and to Caitlin Moorman for her incredible feedback.