Opportunities for the Modern Data Stack

The modern data stack (MDS) is the defining paradigm informing how new organizations use technology to leverage data. Lots of digital ink has been spilled, and takes are growing ever spicier on all things MDS. Here’s a more benign one:

The Modern Data Stack currently focuses too much on tooling and would be more effective if it refocused on the humans using the tools. In particular, the MDS ecosystem doesn’t currently address implementation, tool adoption and other non-technical endeavors that are critical for long-term value generation. As a result, stakeholders will forever complain about the lack of value they’re deriving from their data teams.

The Modern Data Stack largely focuses on technological solutions, touches on some process improvement, and says very little about how people actually use data. I think there are two broad reasons:

- Engineering problems are easier than messy, human problems

- The Modern Data Stack is crafted with an engineering bias, perhaps because it’s easier (per the point above), or because of where data sits on the engineering <–> business spectrum.

As Michael Kaminsky puts it (in our Slack chats on LO):

…data is unique compared to software in that data sits right where the software meets the meat-ware in an organization. To the extent that MDS tooling has been driven by “classical” software engineers, it’s reasonable for them to only think about the left-hand-side users of the tools (other computer systems, presumably reasonable software engineers) and not the right-hand-side users (the business that works in a political environment where the “right” decision cannot be logically deduced). So companies building tools in the data space need to make sure that they’re meeting those needs instead of just the systems-engineering needs.

For example, the MDS is great at offering solutions to specific classes of problems:

- Have a slow query? We can optimize indexes and file types. Done.

- Want to ingest a new data source? Great, just flip this toggle (worry about costs later…).

- Seeing conflicting revenue numbers? No problem, here’s a metrics layer and a data catalog.

These, and others, are great capabilities that I’m glad smarter folks than me are building. That said, these siloed solutions need to be integrated, not simply into a technology stack, but into an organization’s processes and culture. A metrics layer is only going to solve the debate that Marketing and Growth have about your LTV / CAC ratio when paired with people and process changes, since settling that debate is as much (if not more so) a human-oriented, biased decision-making process as an objective, technological one. Real change happens when people build consensus, take stock of their actions and the implications, and iterate, with tech setting the stage for the conversation.

To be clear, this is probably fine since the MDS is a tech stack that helps organizations leverage their data; not a recipe for how to drive value using data. That said, why else do we bother with this if not to drive value? If we want the MDS to help organizations become data-driven (or data informed or whatever), we need to address the people-oriented considerations that are dependent on, or a consequence of, what the MDS enables.

As someone who, in part, helps organizations configure and use the tools in the MDS, I’ve observed a few areas in which I believe we as a data community can bridge the gap between narrowly focused, strictly technological solutions and human-oriented endeavors. The latter span business functions, take many months to produce feedback, and are necessary for value creation.

Painless migrations

The Modern Data Stack provides an obvious and easy solution for organizations buried in manual, Excel-based workflows (caveat: sometimes Excel is the best solution for certain problems).

However, as an organization’s teams and complexity grow, it becomes harder to wean folks off their favorite workflow. Take a migration from a collection of SQL scripts to dbt as an example. Some considerations include:

- Focusing on speed vs. quality. Should we stand up quick end to end models or double down on tests and definitions first to highlight data quality issues? What framework should teams use?

- Recreating output vs. building it correctly. Should we focus on reproducing old logic to show consistency (before and after a migration) or interrogate old assumptions (since dbt allows you to more easily and modularly understand business concepts that weren’t easily accessible in spaghetti SQL scripts)?

- Building consensus. It’s great that a budget owner approved the migration and resourcing required, but what about the folks who are most affected? It would be great to have a playbook for working with engineers who may be concerned about their job duties after the migration, or with stakeholders who aren’t involved but are downstream consumers. Teams must represent and account for their needs early on during the migration process; a popular approach is running cross-functional working groups.
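
For the "recreating output vs. building it correctly" question, it can help to make the differences between old and new output explicit before debating which one is right. Here is a minimal sketch, assuming both the legacy scripts and the migrated models can be exported as a mapping from a key (say, a month) to a number. The `reconcile` function, the tolerance, and the revenue figures are all hypothetical and only for illustration:

```python
from decimal import Decimal


def reconcile(legacy, migrated, tolerance=Decimal("0.01")):
    """Compare two {key: value} outputs and report where they disagree."""
    report = {"missing_in_migrated": [], "missing_in_legacy": [], "mismatched": []}
    for key in sorted(legacy.keys() | migrated.keys()):
        if key not in migrated:
            report["missing_in_migrated"].append(key)
        elif key not in legacy:
            report["missing_in_legacy"].append(key)
        elif abs(legacy[key] - migrated[key]) > tolerance:
            # Keep both values so the conversation can be about the logic,
            # not about whose number is "right".
            report["mismatched"].append((key, legacy[key], migrated[key]))
    return report


# Made-up monthly revenue from the old SQL scripts and the new models.
legacy_revenue = {
    "2023-01": Decimal("1000.00"),
    "2023-02": Decimal("1200.00"),
    "2023-03": Decimal("900.00"),
}
migrated_revenue = {
    "2023-01": Decimal("1000.00"),
    "2023-02": Decimal("1150.00"),
    "2023-04": Decimal("700.00"),
}

report = reconcile(legacy_revenue, migrated_revenue)
for category, items in report.items():
    print(category, items)
```

A report like this doesn't settle whether a mismatch is a migration bug or an old assumption worth revisiting. It just gives the working group a concrete list to talk through.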

Above all, speed is the most important consideration when performing a migration or other change management. If stakeholders can see output quickly, they’re more likely to give you the opportunity to continue rebuilding and reconciling. Speed also tightens feedback loops, which helps you pay back the tech debt that accumulates when you opt for speed. You need to win over stakeholders fast and early, and continue delivering quick wins. A migration will be successful if it’s frictionless and folks are bought in.

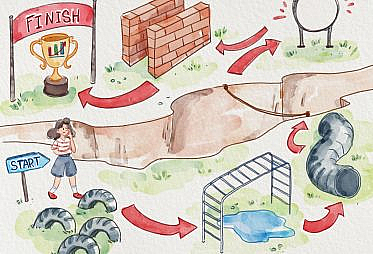

Tool adoption for the long term

Cool, so you have an awesome data alerting tool and you (the Data team) know exactly where metrics are being defined and you’ve documented it. You’ve even invested in a discovery tool.

The problem is your stakeholders, while giving you the thumbs up the whole time and claiming they’d love an easier way to discover data, are no longer using the tools you’ve painstakingly researched and implemented. They fall into their old habits and inevitably you see an incorrectly defined metric on a PowerPoint slide somewhere.

We need to ensure stakeholders adopt data tools in the ways they should. Reading documentation and taking a training is not enough. We need to reinforce good data-tooling hygiene. I’ve seen many instances of folks starting out in a BI tool, and a few months later they’re back in Excel, pivoting a CSV and pasting it into a presentation. There should always be room for creative solutions and serendipity, but the Data team needs to keep an eye on how stakeholders use the tools they implement. Data models and BI tools need to adapt to business changes. Some practices to consider:

- Recurring training. In addition to holding BI tool training sessions for new hires, teams should hold recurring sessions for existing employees covering nuanced and emerging use cases. Teams must continually reinforce good practices. Office hours and data clinics are great, but they are notoriously hard to make successful if they lack structure or default to a one-way information transfer where stakeholders come asking for help. Consider sending out an internal, opt-in newsletter about new features and capabilities in your data tooling. You can also do this in the context of demoing your work.

- Check in with stakeholders. I recommend taking both a passive and active approach. Look at metadata on the tool usage and see how frequently folks post charts and numbers across different communication channels. You can also send surveys across the organization asking people about the last thing they created using the tool. And nothing beats anecdotal conversations with users, like a product team would do.

- Interrogate use cases regularly. The organization may have needed a certain tool at the time. Fast forward 12 months and the company has grown (or turned over) and use cases have changed. It would be great if data tools could provide a way for practitioners to reflect on how folks use the tools and when they should use them differently. Data tools do a great job of listing out their various features and capabilities, but it’s not always obvious when a data team needs to pivot to a new use case or try a different approach. There’s an opportunity here for data tools to partner with data teams along their journey.
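
The passive half of checking in with stakeholders can start very small. Here is a rough sketch, assuming your BI tool lets you export the last date each user was active. The `lapsed_users` helper, the 30-day threshold, and the names and dates are made up for illustration and don't reflect any particular tool's API:

```python
from datetime import date, timedelta


def lapsed_users(last_seen, today, max_idle_days=30):
    """Return users whose last activity is older than the idle threshold."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(user for user, seen in last_seen.items() if seen < cutoff)


# Hypothetical export: user -> last day they opened a dashboard.
last_seen = {
    "ana": date(2024, 3, 28),
    "ben": date(2024, 1, 15),
    "cy": date(2024, 2, 20),
}

to_check_in_with = lapsed_users(last_seen, today=date(2024, 4, 1))
print(to_check_in_with)
```

The list is a prompt for conversations, not a scorecard. Someone who stopped logging in may have found a better workflow, or may be back in Excel with a stale CSV, and only talking to them will tell you which.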

Data teams are responsible for their organizations’ successful adoption of data tools, and there are lots of great thinkpieces out there on how teams can do this. That said, I’d love to see more best practices coming out of the MDS ecosystem that help ensure long-term adoption and value creation, vs. walking away after a “successful” implementation.

Cracking data governance

There has been a lot of progress in the data governance space: obfuscating sensitive data (while retaining its value), increasing trust (via better observability and definitions), empowering users, etc. That said, this layer of the MDS will always involve people much more so than the other layers because so much of governance comes down to interpretation. As Michael alludes to above, in business contexts, the “right” decision cannot be logically deduced and therefore requires people to get involved.

Working in a regulated environment

The only way to ensure a process or workflow is compliant is to ask your compliance team. And try as you might, you won’t ever remove them from the process or automate their decisions away. Oftentimes what Engineering thinks is compliant (let’s hash people’s names and DOBs) is not what the QA/compliance team thinks, which ultimately is what the CEO and executive team defer to. Usually what happens is that the key representatives, broadly including technology, the business, and regulatory, get in a room and attempt to figure it out. Ultimately this comes down to interpreting regulation, understanding what auditors care about, and doing your best to stay out of the news. Some ideas:

- Data visibility. Easily identifying all the data an organization has about an individual, and developing a set of options (mask, partially delete, obscure, do nothing, or some combination) is a helpful capability when threading the business and compliance needle. The ability to do this very simply (and iteratively) would go a long way for regulated businesses.

- Seamless data governance. Heads of Data would benefit from a tech-enabled “data governance in a box”: policies and procedures, appointing data security folks, cross-functional governance rituals, documentation to share with auditors or regulators, guidance on how to talk about data to a regulator, training programs, etc. That way they don’t need to figure it out as they go, or say the wrong thing in front of the wrong person.
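
To make the "set of options" idea under data visibility concrete, here is a minimal sketch of a per-field policy applied to one person's record. Everything in it is an assumption for illustration: the `apply_policy` helper, the action names, the salt, and the sample record. Which action is acceptable for which field is exactly the call your compliance team makes, and as noted above, hashing a field is not automatically compliant.

```python
import hashlib


def apply_policy(record, policy, salt):
    """Apply a per-field action to a record; fields without a rule are kept."""
    result = {}
    for field, value in record.items():
        action = policy.get(field, "keep")
        if action == "drop":
            continue  # remove the field entirely
        if action == "mask":
            result[field] = "*" * len(str(value))
        elif action == "hash":
            digest = hashlib.sha256((salt + str(value)).encode("utf-8"))
            result[field] = digest.hexdigest()
        elif action == "keep":
            result[field] = value
        else:
            raise ValueError(f"unknown action {action!r} for field {field!r}")
    return result


# Hypothetical record and policy; the policy is the part to iterate on
# with compliance, the code just makes each option cheap to try.
record = {"name": "Ada", "dob": "1990-01-01", "ssn": "123-45-6789", "plan": "pro"}
policy = {"name": "mask", "dob": "hash", "ssn": "drop"}

safe_record = apply_policy(record, policy, salt="example-salt")
print(safe_record)
```

Keeping the policy as plain data means the room full of technology, business, and regulatory folks can argue about a short table of fields and actions instead of about code.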

I believe the biggest unlock for the MDS in this space is making it easier for data teams to enable data visibility and optionality, and provide an easier way to have the governance conversation.

Improving data quality

The MDS has attempted to elevate the role of data trustworthiness in the stack in countless ways. Data trustworthiness could mean:

- Data pipelines are configured such that data arrives as expected and is complete

- Stakeholders understand how their data is defined and use it in ways congruent with their business needs

- Pipelines have tests to check for data integrity (e.g. unique IDs) or adherence to business rules (e.g. someone who signed up must have a valid credit card number)
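
As a rough illustration of the two kinds of tests in the last bullet, here is a sketch in plain Python over a list of rows. The row shape and function names are hypothetical, and "valid credit card number" is interpreted narrowly as "passes the Luhn checksum", which is an assumption on my part. In practice you'd likely express these in your pipeline tool's own test framework.

```python
def duplicate_ids(rows, key="id"):
    """Data integrity: return IDs that appear more than once."""
    seen, dupes = set(), set()
    for row in rows:
        if row[key] in seen:
            dupes.add(row[key])
        seen.add(row[key])
    return sorted(dupes)


def luhn_valid(number):
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 12:
        return False
    total = 0
    for i, digit in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def signups_without_valid_card(rows):
    """Business rule: anyone who signed up must have a valid card number."""
    return [
        row["id"]
        for row in rows
        if row["signed_up"] and not luhn_valid(row.get("card_number") or "")
    ]


# Made-up rows; 4111111111111111 is a commonly used test card number.
rows = [
    {"id": 1, "signed_up": True, "card_number": "4111111111111111"},
    {"id": 2, "signed_up": True, "card_number": "4111111111111112"},
    {"id": 2, "signed_up": False, "card_number": None},
    {"id": 3, "signed_up": True, "card_number": None},
]

print(duplicate_ids(rows))
print(signups_without_valid_card(rows))
```

Note the difference in who can judge a failure: a duplicate ID is an engineering problem, while a signup without a valid card needs someone from the business to say whether the rule still holds.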

I believe data teams fall back on writing more and more tests throughout their pipelines because tests feel free (they aren’t), and if some tests are good, perhaps more is better. It’s great that we can now write tests very easily, but test fatigue is a real problem. I’d love to see tooling that is opinionated on how to write good tests to solve specific problems, and how to build a culture and ecosystem of better data quality in general. We need to have a better story around things such as:

- Test visibility. Visibility for test coverage across all pipelines. One of the failure modes of writing too many tests everywhere is that it’s hard to reason about the downstream impact of failing tests, which can lead to inconsistent signals and remediation measures. Consider having a master dashboard of all tests and their statuses, and making it someone’s job to stay on top of it.

- Create a RACI (or similar) for tests. Teams should identify and assign owners to deal with failing tests. That individual can create rituals around it, put in a framework, leverage others, etc. If their performance review depends on it, it’ll get solved quickly. Furthermore, a team should publish an SLA congruent with the urgency and importance of tests that fail, and have ways to understand how frequently that SLA has been achieved. It would be great to embed this somewhere within the infrastructure, instead of, say, ad-hoc Slack notifications and games of “not it”.

- Determine the impact of testing. Teams should quantify the increased data trustworthiness as a result of tests. They could do so by surveying consumers, looking at metadata on tool usage over time, and gathering anecdotal evidence from conversations with key stakeholders. And iterate.
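
Tracking how often a published SLA is actually met doesn't need much machinery to get started. Here is a minimal sketch, assuming you can log each test failure with a severity, a failure time, and a resolution time. The field names, severities, and SLA hours are hypothetical, and unresolved failures are counted as missed, which is a simplification:

```python
from datetime import datetime, timedelta


def sla_attainment(failures, sla_hours):
    """Return the share of failures resolved within their severity's SLA.

    Returns None when there are no failures to measure.
    """
    if not failures:
        return None
    met = 0
    for failure in failures:
        limit = timedelta(hours=sla_hours[failure["severity"]])
        resolved_at = failure["resolved_at"]
        if resolved_at is not None and resolved_at - failure["failed_at"] <= limit:
            met += 1
    return met / len(failures)


# Hypothetical SLA: critical tests fixed within 4 hours, low within 72.
sla_hours = {"critical": 4, "low": 72}

failures = [
    {"severity": "critical", "failed_at": datetime(2024, 4, 1, 9),
     "resolved_at": datetime(2024, 4, 1, 11)},   # 2h, within SLA
    {"severity": "critical", "failed_at": datetime(2024, 4, 2, 9),
     "resolved_at": datetime(2024, 4, 2, 18)},   # 9h, missed
    {"severity": "low", "failed_at": datetime(2024, 4, 3, 9),
     "resolved_at": datetime(2024, 4, 4, 9)},    # 24h, within SLA
    {"severity": "low", "failed_at": datetime(2024, 4, 5, 9),
     "resolved_at": None},                       # still open, counted as missed
]

attainment = sla_attainment(failures, sla_hours)
print(f"SLA met for {attainment:.0%} of failures")
```

A number like this is most useful next to the owner's name from the RACI, reviewed on a regular cadence rather than only when something breaks.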

Finally, as with data tooling adoption, we should continue to check in with stakeholders on their levels of data trust, including how they feel about changing metric definitions. As organizations grow, new people and functions are added while old ones turn over, so metric definitions and use cases need to evolve.

Refocusing on the humans

With the MDS, the current moment is a unique time to be in data. There is a lot of promise, and some of the technologies being built will be here to stay (though consolidation is real). The baseline is being raised.

At the same time, we shouldn’t forget that humans are using these tools, in their biased, political and erroneous ways. If we want our data stack and data team to continue appearing above the line in annual budgets, let’s be empathetic to our users and their stakeholders. Let’s meet the people where they are and help enable them to drive value out of their data.

If you have more thoughts, I’d love to hear them over on the Locally Optimistic Slack!